The USA and WWI: Nationalism, Imperialism, and Militarism

During World War I, public opinion shifted against Germany. With the Zimmermann note and Germany's practice of unrestricted submarine warfare, the USA was pushed to officially declare war on April 6, 1917.

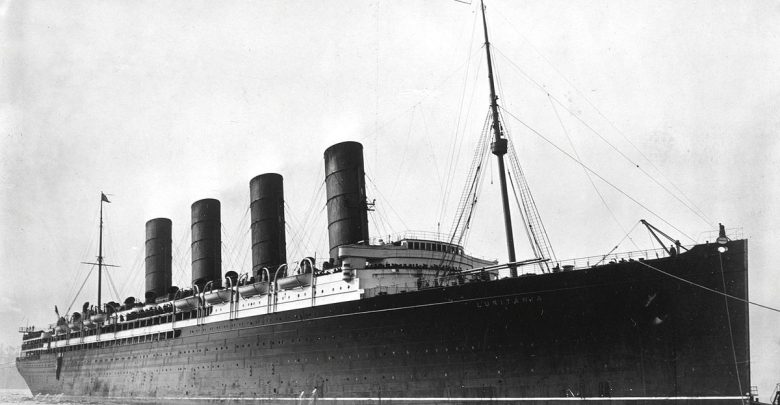

The United States of America did not officially involve itself in World War I when it began on July 28, 1914. The USA wanted to present itself as a neutral party in world events, as it had with other European wars. This changed on May 7, 1915, when a German U-boat sank the RMS Lusitania, killing 128 Americans. Public opinion shifted against Germany, and the Zimmermann note, together with Germany’s practice of unrestricted submarine warfare, pushed the USA to officially declare war on April 6, 1917.

The USA and Nationalism

Nationalism is a system of social, economic, and political advocacy for the sovereignty of a particular nation, often above all other nations, and the USA was not immune to it. The USA has always had nationalistic tendencies, and they are part of how the country has remained strong. Once the Lusitania was sunk in 1915, nationalism started to grow. Propaganda was employed to turn the public toward Britain by casting it as the side in the right. Sound recordings and movies were used to portray Germany negatively.

The Zimmermann note, decoded in 1917, fueled nationalism even more. The note was from Germany to Mexico, and it said that Germany would help Mexico recover Texas, New Mexico, and Arizona should war with the USA come to be. When the note was released to the public in March, hatred of Germany was strong and American pride was stronger. By early April, the USA had entered the Great War.

The USA and Imperialism

Imperialism is the extension of one country’s power and influence into a different territory through diplomatic or military means. Especially in this era, the USA was an imperialistic nation. Its territories were spread all over the world, and the war threatened them. WWI also offered potential gains for the USA, although in the end the USA gained no new land.

WWI promised the USA more influence. President Woodrow Wilson tried to take advantage of this influence with the Treaty of Versailles after the war, though the treaty was mostly a failure. The USA’s territories were able to see the strength of the country, which won it some support. Both the threats to its territories and the potential gains helped guide the USA into WWI.

The USA and Militarism

Militarism is the idea that a country should have, maintain, and be willing to use a large and strong military. First, the militarism of Germany encouraged the USA to declare war. Many Americans perceived German militarism as a threat to the American way of life, a perception further fueled by the Zimmermann note and unrestricted submarine warfare.

The USA itself was militaristic, largely due to imperialism. The USA joined the war at a critical time for the Allies (Britain, France, Russia, and Italy), who desperately needed troops that the USA was able to provide. The USA also instituted the draft and continued to grow its military during and after the war. World War I heavily influenced the growth of, and spending on, the USA’s military.

In the End

The fighting in the Great War ended with the armistice of November 11, 1918. The Treaty of Versailles officially ended the war, but it was never ratified by the US Senate. The treaty initially worked, but Germany felt that it had been treated unfairly. This, along with the Great Depression, ultimately plunged the world into the Second World War. Though less directly, militarism, imperialism, and nationalism continued to influence the USA’s behavior during World War II.